It’s safe to say that the COVID-19 pandemic has changed the world.

With roughly half the world’s population confined to their homes and a looming recession, the likes of which we’ve never seen, this is uncharted territory.

As the world prepares to slowly open up again, many initiatives are being undertaken to help manage the health crisis.

One of the initiatives that have generated a lot of chatter is the rollout of contact tracing apps or exposure notification apps. The latter expression was concocted by Apple and Google’s marketing teams to make the initiative appear less privacy-invasive.

But what are the privacy concerns with this approach? And, more fundamentally, will this approach be useful? Will it work?

We’ll attempt to answer these questions in this article.

The History

Your news feeds are probably, like mine, flooded with COVID-19 news. So I won’t get into the specifics – which you probably already know.

We’ve now apparently passed the peak of the first wave of COVID-19 (and hopefully the last, but it’s too early to tell). And governments around the world are preparing their deconfinement strategies.

In the midst of all this, on April 10th, Apple and Google announced a joint initiative to produce a framework that runs on both iOS and Android smartphones.

They claim this joint API would enable COVID-19 contact tracing, in a way that respects privacy.

How Does It Work?

Basically, Apple and Google’s approach to contact tracing works as follows:

The framework uses Bluetooth rather than GPS for contact tracing.

To use the functionality, you must download the official app produced by your country’s public health agencies. Without the app, you cannot use the API.

And only official apps, from proper public health authorities, can access the API.

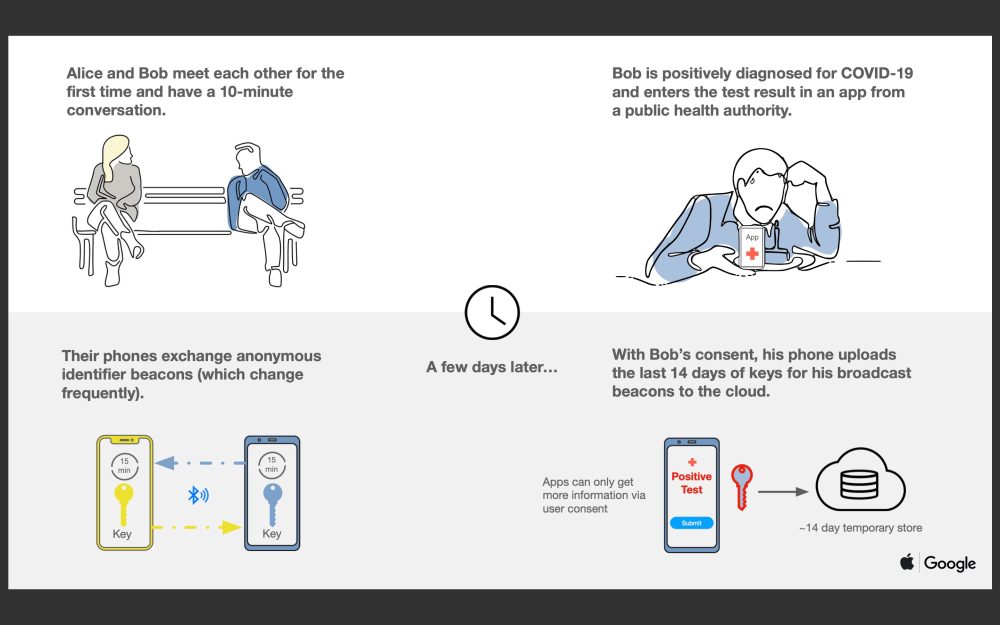

So, once the API is enabled and the relevant app is downloaded, the phone starts broadcasting Bluetooth signals to other nearby enabled phones. At the same time, the phone also listens for incoming Bluetooth signals from other enabled phones.

When two enabled phones come into close contact with one another, the phones exchange anonymous Bluetooth identifiers, derived from rotating keys that are on-device.
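To make the idea of identifiers derived from rotating keys more concrete, here is a minimal Python sketch. It is not the algorithm from Apple and Google’s specification: the HMAC-SHA256 construction, the `rotating-id` label, the 16-byte length and the 10-minute interval are all assumptions chosen for illustration.

```python
import hashlib
import hmac
import os

IDENTIFIER_BYTES = 16    # assumed identifier length
INTERVALS_PER_DAY = 144  # assumed: one identifier per 10-minute interval


def new_daily_key() -> bytes:
    """Generate a random key that stays on the device unless the user uploads it."""
    return os.urandom(16)


def derive_identifier(daily_key: bytes, interval: int) -> bytes:
    """Derive the identifier broadcast during one interval of the day."""
    message = b"rotating-id" + interval.to_bytes(2, "big")
    digest = hmac.new(daily_key, message, hashlib.sha256).digest()
    return digest[:IDENTIFIER_BYTES]


def identifiers_for_day(daily_key: bytes) -> list:
    """Regenerate every identifier that a given daily key produces."""
    return [derive_identifier(daily_key, i) for i in range(INTERVALS_PER_DAY)]
```

With a construction like this, someone who overhears an identifier cannot feasibly work back to the key, while anyone who holds the key can regenerate every identifier it produced that day.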

If a user of the app is diagnosed with COVID-19, they can inform the app of their status.

The app then uploads their last two weeks of keys to a server managed by the relevant public health authorities.

The server then distributes those keys to the other participating phones, which derive the corresponding identifiers locally.

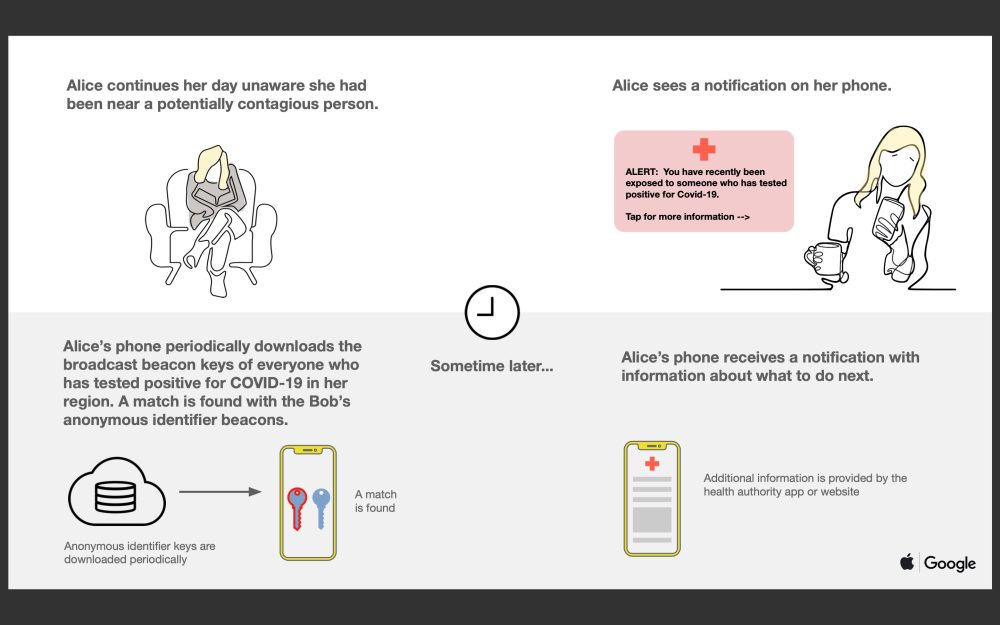

If another phone finds a match with the identifiers on their device, the phone’s owner is notified that they’ve been in contact with someone who has potentially contracted COVID-19.
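The matching step can be sketched as a set intersection: identifiers are re-derived from the uploaded keys and compared with the identifiers a phone has heard. This is a simplified illustration, not the real API. The `derive_identifier` construction, the `find_exposures` name and the 144 intervals per day are assumptions.

```python
import hashlib
import hmac


def derive_identifier(daily_key: bytes, interval: int) -> bytes:
    """Illustrative derivation (an assumption, not the official algorithm)."""
    message = b"rotating-id" + interval.to_bytes(2, "big")
    return hmac.new(daily_key, message, hashlib.sha256).digest()[:16]


def find_exposures(uploaded_keys, heard_identifiers, intervals_per_day=144):
    """Return the uploaded keys that produced at least one identifier we heard."""
    matches = []
    for key in uploaded_keys:
        derived = {derive_identifier(key, i) for i in range(intervals_per_day)}
        if derived & heard_identifiers:  # any overlap means a logged contact
            matches.append(key)
    return matches
```

Note that the comparison only needs the identifiers stored on the phone; nothing about the phone’s owner has to be sent anywhere for a match to be found.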

That’s how it works in a nutshell. And when compared to using location information (GPS), this API has definite privacy advantages:

- It requires explicit user consent.

- It doesn’t collect personally identifiable information or location data.

- The list of proximity contacts stays on your phone.

- Diagnosed people are not identified to other users, Google, or Apple.

- Apple and Google pledge that it will be used only for contact tracing by public health authorities during the COVID-19 pandemic, and they have the technical ability to “turn off” the feature on a regional basis.

This is good. But the devil is in the details, so let’s take a closer look.

Is It Really Privacy-Preserving?

On their blog, the EFF provides a privacy analysis of the proposed API. They list three major privacy concerns they have with Apple and Google’s approach.

Deducing Disease

The EFF is concerned that a system that checks diagnosis keys against the Bluetooth identifiers stored on a device can enable people to figure out which of the people they encountered is infected.

“[…] [I]f you have a contact with a friend, and your friend reports that they are infected, you could use your own device’s contact log to learn that they are sick. Taken to an extreme, bad actors could collect RPIDs [Bluetooth identifiers] en masse, connect them to identities using face recognition or other tech, and create a database of who’s infected.”

This would indeed make the app much less anonymous.

Keys Rather Than Identifiers

By having infected users upload their keys, rather than simply the relevant Bluetooth identifiers (which are anonymous), the API exposes them to linkage attacks.

Suppose someone were to obtain a stash of Bluetooth identifiers and the rotating keys, through a rogue app or static Bluetooth beacons set up in public spaces.

With those two data points, they could link together all of the Bluetooth identifiers derived from those keys.

This can expose a person’s daily routine: where they work, live, worship, etc.
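Here is a rough sketch of what such a linkage could look like. Everything in it is hypothetical: the `derive_identifier` construction stands in for the real derivation, and `sightings` represents a log of (place, identifier) pairs that passive beacons might have recorded.

```python
import hashlib
import hmac


def derive_identifier(daily_key: bytes, interval: int) -> bytes:
    """Illustrative derivation (an assumption, not the official algorithm)."""
    message = b"rotating-id" + interval.to_bytes(2, "big")
    return hmac.new(daily_key, message, hashlib.sha256).digest()[:16]


def link_sightings(published_key, sightings, intervals_per_day=144):
    """Rebuild one person's trail from beacon logs and a published key.

    sightings: list of (place, identifier) tuples recorded by beacons.
    Returns (interval, place) pairs sorted by time of day.
    """
    derived = {
        derive_identifier(published_key, i): i for i in range(intervals_per_day)
    }
    trail = [
        (derived[identifier], place)
        for place, identifier in sightings
        if identifier in derived
    ]
    return sorted(trail)
```

Before the key is published, the logged identifiers look unrelated to one another. Once it is, every identifier derived from that key collapses into a single day-long trail.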

This would not be possible if an infected user simply uploaded the relevant identifiers for the two weeks prior to their diagnosis. But the API uploads the user’s keys rather than just the list of identifiers.

This can be mitigated by shortening the rotation time of the keys. Currently, the keys rotate every 24 hours.

Another Permanent Diary...?

All the collected and generated proximity data stays on your phone unless you knowingly send it to the relevant public health authorities.

This is good.

But it also means that your phone is now carrying yet another detailed log of your movements (past two weeks), complete with who you’ve come in contact with. That’s rather invasive in itself.

Also, law enforcement regularly accesses data stored on smartphones during investigations, whether that access is legitimate or not. They may well try to access this data for purposes unrelated to the pandemic.

And this proximity data is rather personal.

As the EFF points out in its post:

"Anyone who has access to the proximity app data from two users’ phones will be able to see whether, and on what days, they have logged contacts with each other".

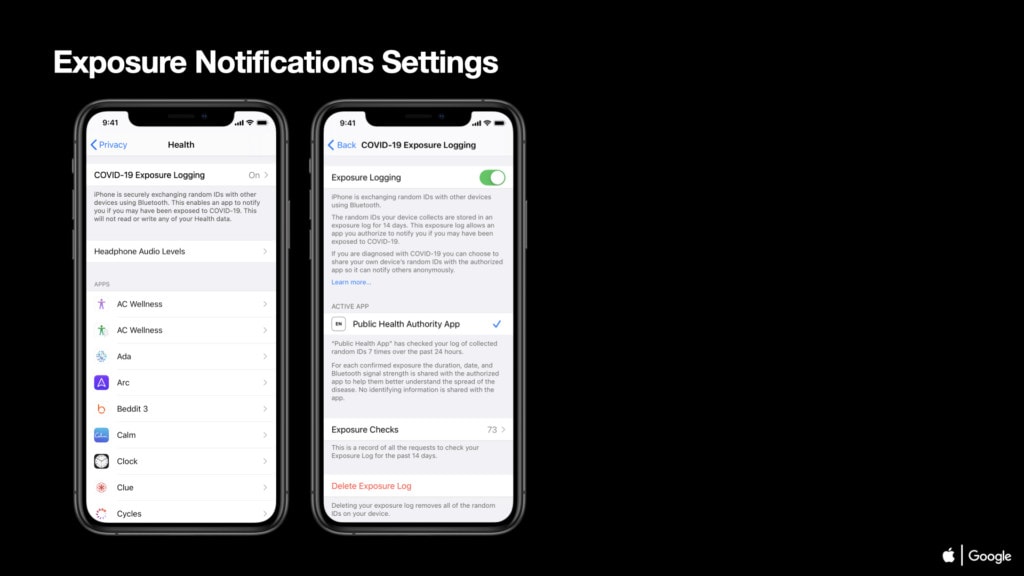

When the EFF wrote their post, it wasn’t clear whether or not it was possible to delete the collected data from your phone.

Apple and Google have since released code samples for their joint API. And it does appear to allow users to delete the generated identifiers from their device.

We definitely like having a ‘Delete’ button. But does it also delete the keys? I guess we’ll learn more in the coming days.

Now, this approach is certainly better than one using location services and a centralized server for storing identifiers and keys. But deleting your identifiers is a manual process that many will forget about.

Perhaps having the app delete all of its data as soon as the API is disabled would be a safer option.

And, of course, all this data should be protected with strong encryption.

It’s actually quite rare for privacy concerns to be front and center in the development of an app. But this is one case where getting these details right may make or break the initiative.

Which brings me to my next point: will it work?

Is Contact Tracing Useful?

Obviously, for such an approach to work, wide adoption is needed. And that’s far from guaranteed.

Health data is generally treated as being very sensitive. And here we have two of the world’s largest technology companies building a tool to enable us to share that data.

As privacy-preserving as it may be, it’s a big ask. And many people will simply not go for it.
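A quick back-of-the-envelope calculation shows why adoption matters so much. A contact can only be logged if both people are running the app, so, under the simplifying assumption that app users are spread evenly and independently through the population, the share of contacts covered is the adoption rate squared. The `contact_coverage` function below is just an illustration of that arithmetic.

```python
def contact_coverage(adoption_rate: float) -> float:
    """Fraction of two-person contacts in which both people run the app."""
    if not 0.0 <= adoption_rate <= 1.0:
        raise ValueError("adoption_rate must be between 0 and 1")
    return adoption_rate ** 2


for rate in (0.2, 0.4, 0.6, 0.8):
    covered = contact_coverage(rate)
    print(f"{rate:.0%} adoption -> {covered:.0%} of contacts covered")
```

Under this assumption, even 60% adoption would leave roughly two out of three contacts invisible to the system.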

Also, this approach relies on mobile phones and assumes that 1 phone = 1 human, which is NOT the case. Phones can be left at home, turned off, run out of battery, etc.

The API broadcasts roughly every five minutes. Aside from draining your battery, it will also potentially miss contacts that occur between broadcasts.

There will inevitably be millions of contacts that won’t be registered by the API.

Another point about adoption is that the elderly and low-income households have the lowest adoption rate for mobile phones. And paradoxically, it is these demographic groups that are most at risk from complications due to COVID-19.

This is a huge gap in the system.

And then, of course, there are the questions surrounding false positives and false negatives that these apps will undoubtedly need to grapple with.

False Positives

These apps will inevitably need to define what counts as contact – this will be time and distance. Say six feet for over ten minutes.
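In code, such a rule could be as simple as the sketch below. The `Encounter` type and the two thresholds are hypothetical, taken from the “six feet for ten minutes” example rather than from any real app.

```python
from dataclasses import dataclass

MAX_DISTANCE_FEET = 6.0       # assumed threshold from the example above
MIN_DURATION_MINUTES = 10.0   # assumed threshold from the example above


@dataclass
class Encounter:
    distance_feet: float      # estimated distance between the two phones
    duration_minutes: float   # how long the phones stayed within range


def counts_as_contact(encounter: Encounter) -> bool:
    """Apply the time-and-distance rule; nothing else about the encounter is known."""
    return (
        encounter.distance_feet <= MAX_DISTANCE_FEET
        and encounter.duration_minutes >= MIN_DURATION_MINUTES
    )
```

The rule only ever sees two numbers, which is exactly where the trouble starts.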

A false positive will be contact that conforms to the time and distance criteria, but that doesn’t lead to transmission. And the time and distance criteria are obviously not enough to confirm transmission.

So these false positives will happen in many circumstances, because the app won’t be aware of walls or the fact that the people in contact are both wearing masks, for example.

And not every contact, even without barriers or masks, will result in transmission.

False Negatives

A false negative, conversely, occurs when the app fails to register a contact even though an infection actually does occur.

This will happen because of errors in the app’s proximity systems. It will happen because people not using the app will continue to infect others.

And it will happen because transmission can occur outside of precisely defined contact. The virus can sometimes travel further and you can also be infected through contact with objects and surfaces.

As Bruce Schneier, privacy expert and fellow at the Berkman Klein Center for Internet & Society at Harvard University, puts it:

“The end result is an app that doesn't work. People will post their bad experiences on social media, and people will read those posts and realize that the app is not to be trusted. That loss of trust is even worse than having no app at all. It has nothing to do with privacy concerns. The idea that contact tracing can be done with an app, and not human health professionals, is just plain dumb.”

Our Take...

While there is a lot of good in the design of Apple and Google’s joint API, there are still many security and privacy holes left open.

And even if it were perfect (which no app will ever be), we’re still confronted with the usefulness problem.

It just seems like this approach won’t help us much. Even in the best-case scenarios, it will be difficult to trust the data coming from these apps. And these apps will ignore the most vulnerable communities.

An app is a poor substitute for accurate testing by medical professionals.

So, in case you’re wondering, no, I won’t be installing this on my phone. But that’s me. I encourage you to read as much as you can about this and make your own decisions.

As always, and perhaps more significantly this time around, stay safe.

Apple and Google’s COVID-19 Exposure Notification API: What You Should Know

By Marc Dahan

Last updated: May 5, 2020